Online media is currently grappling with a crisis characterized by diminishing trust, the widespread dissemination of misinformation, and the alarming proliferation of fake news and experiences. This article delves into the challenges plaguing the digital media landscape and proposes the adoption of biometric technology as a potential solution. Biometrics, with its capabilities in identity verification, content authentication, mitigation of bots and Sybil attacks, and creation of personalized user experiences, offers unique advantages to restore trust, combat misinformation, and establish a secure online media ecosystem. This article outlines the potential benefits and considerations associated with integrating biometric solutions within the digital media realm.

Biometrics: A Beacon of Trust in the Digital Media Crisis (humanode.io)

The contemporary media landscape is characterized by hybridization (Chadwick, 2013), porosity (Carlson & Lewis, 2015), and technological saturation (Harambam et al., 2018). It is grappling with a severe crisis characterized by the proliferation of misinformation, a decline in public trust, and the rampant spread of fake news, largely fueled by social media platforms. The accessibility and rapid sharing of information have compromised the integrity and reliability of media content, posing significant challenges to society. This crisis undermines the public’s ability to make informed decisions, erodes democratic processes, and fosters division among individuals and communities.

The issue of trust in media has generated a substantial body of research that has investigated the causes and effects of trust in media (Engelke et al., 2019; Kiousis, 2001; Ladd, 2012; Prochazka and Schweiger, 2019; Tsfati and Ariely, 2014) and has sought to measure and understand trust dynamics (Fink, 2019; Flew, 2019; Ireton, 2018; Kalogeropoulos et al., 2019; Park et al., 2020; Peters and Broersma, 2013; Tsfati and Ariely, 2014; Usher, 2018). Recently published systematic reviews have explored specific types of online misinformation, particularly fake news, along with their characteristics and impact (Baptista & Gradim, 2020; Celliers & Hattingh, 2020; Di Domenico, Sit, et al., 2021; Pennycook & Rand, 2021). Detection methods for identifying and countering misinformation, including both human-generated and AI-generated content (Kolo et al., 2022; Chan-Olmsted and Shin, 2023), have also been extensively examined in the literature (Sharma et al., 2019; Zhang & Ghorbani, 2020). The fusion of traditional and digital media, the permeability of information across various platforms, and the pervasive influence of technology have tested the media by transforming its environment in unprecedented ways.

Today, social networks are a key contributor to online awareness, and trust in them as a source of information is predicted to grow rapidly (Kolomeets and Chechulin, 2021), while the lack of trust in media and journalism has been framed as a “challenge” (Fink, 2019) that is leading to an industry “crisis” (Moran and Nechushtai, 2022). Social media platforms as a phenomenon have reshaped the structure, dimensions, and complexity of news dissemination (Berkowitz & Schwartz, 2016; Copeland, 2007; Kim & Lyon, 2014). Their widespread use has accelerated the dissemination of misinformation, enabling individuals to generate and share false information quickly and anonymously (Del Vicario et al., 2016). Major social media platforms serve as spaces for social interaction, communication, and entertainment (Hwang et al., 2011; Kuem et al., 2017) while also being significant channels for the broad sharing of information and news that is not necessarily true or relevant (Vosoughi et al., 2018).

On top of that, the online media landscape has become a breeding ground for Sybil attacks (Douceur, 2002), which rely on multiple fake identities and social media bots (Aldayel and Magdy, 2022; Cai, Li, Zengi, 2017). Such bots, automated accounts controlled by algorithms or humans, have been weaponized to influence search algorithms, amplify and spread false narratives, manipulate public opinion, create artificial trends (Shao et al., 2017) as well as rumors and conspiracy theories (Ferrara, 2020), create fake reputations, suppress political competitors (Pierri et al., 2020; Benkler et al., 2017), and even affect presidential elections (Cresci et al., 2017; Mahesh, 2020; Sedhai and Sun, 2015). This manipulation has led to a biased information flow that favors specific perspectives, products, or organizations and hinders users’ access to accurate and diverse information. In recent years, researchers have dedicated a significant amount of attention to social media bot detection (Aljabri et al., 2023; Ali and Syed, 2022; Ferrara, 2018; Rangel and Rosso, 2019; Yang et al., 2012), prevention (Thakur and Breslin, 2021; Kavazi et al., 2021), and defense (Al-Qurishi et al., 2017).

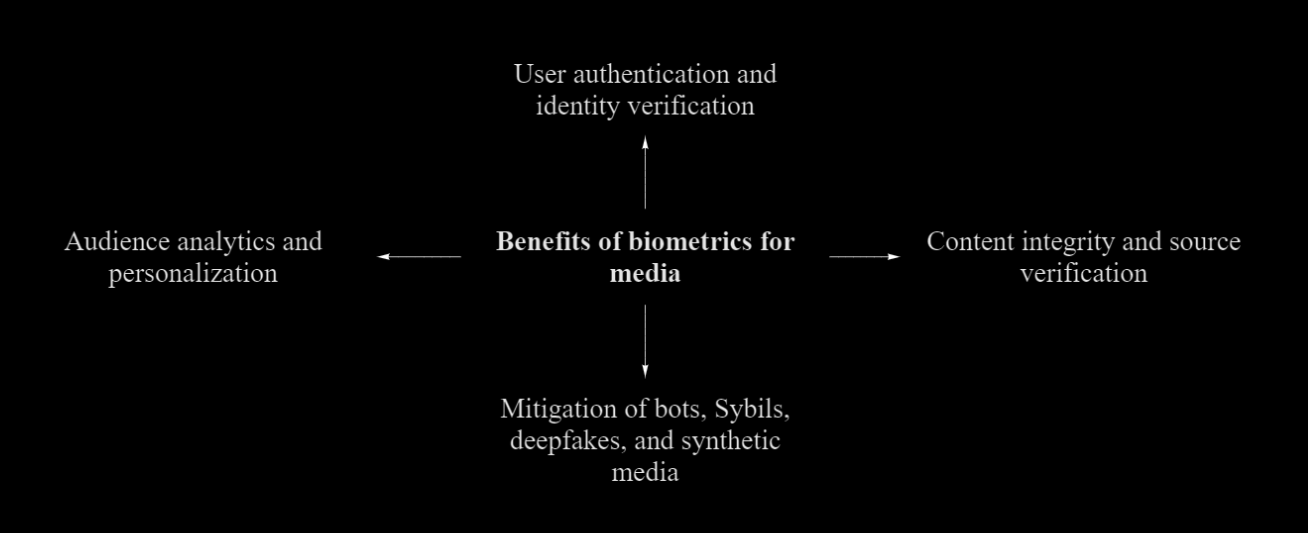

In order to address this crisis, it is imperative for the media to seek innovative alternative solutions that have the potential to restore trust, promote authenticity, and ensure the delivery of accurate and reliable information to the public. We believe that the solution lies in the utilization of biometric technology, which offers unique capabilities in verifying identity and authenticating user interactions, ensuring content integrity, and enhancing user experiences.

Unlocking the power of biometrics for media

Biometrics, the science of recognizing and verifying individuals based on their unique physiological or behavioral characteristics, has rapidly advanced in recent years (Kaur and Verma, 2014; Venkatesan and Senthamaraikannan, 2018; Choudhury et al., 2018; Raju and Udayashankara, 2018). The introduction of machine learning techniques, such as deep learning and convolutional neural networks (Cherrat et al., 2020; Jin, 2022), has revolutionized biometric recognition algorithms, significantly enhancing the accuracy, efficiency, and reliability of biometric systems. The benefits of biometrics for identity verification have expanded accordingly. Flexible and user-friendly biometric verification provides a high degree of authenticity, can be completed quickly and with little effort, and enhances security, particularly in high-risk environments, by providing an extra layer of authentication. Still, while biometrics can offer effectiveness and reliability, it is crucial to acknowledge the challenges it presents, such as privacy concerns, algorithmic bias, adoption rates, integration complexity, and susceptibility to attacks. Striking the right balance between security, privacy, and usability is of utmost importance to ensure the responsible and ethical utilization of biometric technologies. This approach is vital in effectively addressing the aforementioned threats within the media landscape.

With biometric technologies, media organizations can enhance authentication, verification, and identification processes, thereby ensuring the integrity and reliability of information disseminated through digital platforms.

First, biometrics can play a crucial role in ensuring the credibility of users on online platforms. By requiring individuals to authenticate themselves using their unique biometric traits, media organizations can establish a higher level of confidence in user identities, reducing the risk of impersonation and unauthorized access. This authentication process can enhance security and contribute to a more trustworthy media environment.

Second, biometrics can assist in verifying the authenticity and integrity of media content, including images, videos, and audio recordings. By embedding biometric markers during the creation or capture of content, media organizations can establish a verifiable link between the content and its original source, reinforcing the credibility of information.
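One way to picture the "verifiable link" between content and its source is a keyed tag computed over the content at capture time. The sketch below is only an illustration under stated assumptions: the function names are hypothetical, and `creator_key` is assumed to be a secret that a device releases only after the creator passes a biometric check. It uses HMAC from the Python standard library as a stand-in for whatever binding mechanism a real system would choose.

```python
import hashlib
import hmac


def content_digest(data: bytes) -> str:
    """SHA-256 digest of the raw media bytes."""
    return hashlib.sha256(data).hexdigest()


def make_provenance_tag(data: bytes, creator_key: bytes) -> str:
    """Bind content to a creator by computing HMAC-SHA256 over its digest.

    Assumption: creator_key is unlocked only after biometric verification.
    """
    digest = content_digest(data).encode("ascii")
    return hmac.new(creator_key, digest, hashlib.sha256).hexdigest()


def verify_provenance_tag(data: bytes, creator_key: bytes, tag: str) -> bool:
    """Recompute the tag and compare in constant time."""
    expected = make_provenance_tag(data, creator_key)
    return hmac.compare_digest(expected, tag)


# Hypothetical example values.
key = b"secret-released-after-biometric-check"
photo = b"raw image bytes"
tag = make_provenance_tag(photo, key)

print(verify_provenance_tag(photo, key, tag))            # original content
print(verify_provenance_tag(photo + b"edit", key, tag))  # altered content
```

Because HMAC is symmetric, the verifier must hold the same key as the creator. A deployment that needs public verifiability would more likely use public-key signatures, which this sketch does not cover.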

Third, biometrics can play a vital role in combating bots and Sybil attacks within the media landscape. Biometric analysis can aid in differentiating between human users and automated accounts, and in distinguishing real content from synthetic media.

In the context of combating Sybil attacks, liveness detection (Raheem et al., 2019) can play a significant role in verifying that the captured biometric traits belong to a live human user and not a manipulated or fake representation. In addition, continuous authentication can help monitor user behavior and detect suspicious activity in real time, while multimodal biometric fusion increases the reliability of detection systems and reduces the chances of false positives and false negatives (Cherrat et al., 2020; Raju and Udayashankara, 2018).
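To make multimodal fusion concrete, the following minimal sketch shows score-level fusion by weighted sum, gated by a liveness result. It is an illustration only: the modality names, weights, scores, and the 0.8 threshold are assumed values, and the individual matchers and the liveness detector are taken as given rather than implemented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModalityScore:
    """Match score in [0, 1] from one (hypothetical) biometric matcher."""
    name: str
    score: float
    weight: float


def fuse_scores(scores):
    """Weighted-sum fusion of per-modality scores; result stays in [0, 1]."""
    total_weight = sum(s.weight for s in scores)
    if total_weight <= 0:
        raise ValueError("at least one modality needs a positive weight")
    return sum(s.score * s.weight for s in scores) / total_weight


def accept(scores, liveness_passed, threshold=0.8):
    """Accept only when liveness passed and the fused score clears the threshold."""
    return liveness_passed and fuse_scores(scores) >= threshold


# Assumed example values for three modalities.
scores = [
    ModalityScore("face", 0.92, weight=0.5),
    ModalityScore("fingerprint", 0.85, weight=0.3),
    ModalityScore("finger_vein", 0.60, weight=0.2),
]

print(round(fuse_scores(scores), 3))
print(accept(scores, liveness_passed=True))
print(accept(scores, liveness_passed=False))
```

In this example a weak finger-vein score is outweighed by strong face and fingerprint scores, which is the sense in which fusion can reduce false rejections. A failed liveness check rejects the attempt regardless of how well the traits match.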

On top of that, biometrics can enable media organizations to gain insights into audience engagement and preferences. Media platforms can measure emotional reactions, identify engagement levels, and tailor content delivery to individual preferences by analyzing biometric data such as facial expressions, gaze patterns, or physiological responses. This personalized approach enhances user experience, improves content relevance, and fosters deeper audience engagement.

Humanode: Pioneering trust and authenticity in the digital environment

Humanode is a pioneering project at the forefront of digital identity verification within decentralized environments, particularly in the realm of media. Employing state-of-the-art cryptography, blockchain technology, and biometrics, Humanode aims to combat Sybil attacks and ensure the authenticity of human identities (Kavazi et al., 2021).

Central to Humanode’s mission is the use of biometric authentication and verification processes to link each user account on platforms (including media ones) to a unique and verifiable human identity. Within the media realm, by leveraging liveness detection, the project strives to eradicate automated accounts and establish a trustworthy space for both content creation and consumption.

Furthermore, Humanode places a strong emphasis on user privacy and security, fostering a transparent and accountable environment that promotes responsible behavior among users. This commitment not only enhances trust but also encourages genuine engagement and interactions.

An important milestone for the Humanode project came in 2023 with the introduction of BotBasher. This tool has been deployed in numerous Discord servers and has verified hundreds of thousands of unique users (at the time of writing, 571 communities have adopted BotBasher, with 415,506 biometric verifications). This implementation marks just the beginning of Humanode’s journey toward creating a more secure and authentic digital landscape, for media platforms specifically.

The next strategic leap taken by the team involved the development of Biomapper, a groundbreaking solution designed to bolster the security of digital identities. In essence, Biomapper, or private on-chain Biomapping, empowers users to validate their identity by scanning their biometrics, affirming the presence of a live and distinct human behind the paired Humanode EVM address (EVM wallet address). Importantly, this process ensures the utmost privacy of the actual biometric data, which remains securely encrypted within Confidential Virtual Machines (CVMs). Analogous to zero-knowledge proofs (ZKPs), Biomapper allows the system to verify the authenticity of the information without accessing or storing specific biometric details. This innovative approach holds immense potential for enhancing security and trust on crypto media platforms, providing users with a seamless and privacy-centric method to assert their unique identity while safeguarding sensitive biometric information.
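The one-human-one-address idea can be sketched as a simple uniqueness registry. This is not Humanode's implementation or API. It is a hypothetical illustration that assumes a confidential component has already performed liveness detection and biometric matching and hands the registry only an opaque `person_token`, so no biometric data is stored here. A plain hash of a raw biometric sample would not serve as such a token, since two captures of the same person are never bit-identical.

```python
class UniquenessRegistry:
    """Maps opaque person tokens to addresses, one-to-one (illustrative only)."""

    def __init__(self):
        self._address_by_token = {}
        self._token_by_address = {}

    def register(self, person_token: str, address: str) -> bool:
        """Link a token to an address; refuse if either is already linked."""
        if person_token in self._address_by_token:
            return False  # same human trying to map a second address
        if address in self._token_by_address:
            return False  # address already claimed by another human
        self._address_by_token[person_token] = address
        self._token_by_address[address] = person_token
        return True

    def is_verified(self, address: str) -> bool:
        """True if the address is linked to a verified unique human."""
        return address in self._token_by_address


# Hypothetical tokens and addresses.
registry = UniquenessRegistry()
print(registry.register("token-alice", "0xA1"))  # first mapping
print(registry.register("token-alice", "0xB2"))  # second address, same human
print(registry.is_verified("0xA1"))
```

A platform could then consult `is_verified` before granting posting or voting rights, which makes creating many fake accounts costly because each one needs a distinct live human.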

Conclusion

Biometric technology offers unique advantages in restoring trust in media. Robust biometric authentication systems enable media organizations to verify the authenticity of users, thereby mitigating risks such as impersonation and identity theft. By integrating biometric traits as authentication factors, organizations add a further layer of security, instilling confidence in users regarding the reliability and credibility of the information they consume and share. Furthermore, biometrics can effectively combat the influence of social media bots and Sybil attacks by differentiating between human users and automated accounts, thereby enhancing the quality and integrity of user-generated content.

It is important to recognize that biometrics alone cannot solve the crisis in media. It should be part of a holistic approach that incorporates education, media literacy, and transparency in journalistic practices.

References

- Aldayel, A. and Magdy, W. (2022) Characterizing the role of bots’ in polarized stance on social media. Soc Netw Anal Mining.

- Aljabri, M., Zagrouba, R., Shaahid, A. et al. (2023) Machine learning-based social media bot detection: a comprehensive literature review. Soc. Netw. Anal. Min.

- Chan-Olmsted S., Shin, J. (2023) User Perceptions and Trust of Explainable Machine Learning Fake News Detectors. Journal of Communication, Vol. 17.

- Cherrat E, Alaoui R, Bouzahir H. (2020) Convolutional neural networks approach for multimodal biometric identification system using the fusion of fingerprint, finger-vein and face images. PeerJ Computer Science 6:e248.

- Cresci S, Spognardi A, Petrocchi M, Tesconi M, di Pietro R (2017) The paradigm-shift of social spambots: evidence, theories, and tools for the arms race. In: 26th international world wide web conference 2017, WWW 2017 companion.

- Del Vicario, M., Bessi, A., Zollo, F., Petroni, F., Scala, A., Caldarelli, G., Stanley, H. E., & Quattrociocchi, W. (2016). The spreading of misinformation online. Proceedings of the National Academy of Sciences, 113(3), 554–559.

- Di Domenico, G., Sit, J., Ishizaka, A., Nunan, D. (2021) Fake news, social media and marketing: A systematic review. Journal of Business Research, Volume 124. Pp 329–341. ISSN 0148–2963.

- Douceur, J.R. (2002). The Sybil Attack. In: Druschel, P., Kaashoek, F., Rowstron, A. (eds) Peer-to-Peer Systems. IPTPS 2002. Lecture Notes in Computer Science, vol 2429. Springer, Berlin, Heidelberg.

- Engelke K.M, Hase V., Wintterlin F. (2019) On measuring trust and distrust in journalism: Reflection of the status quo and suggestions for the road ahead. Journal of Trust Research 9(1): 66–86.

- Ferrara, E. (2018). Measuring Social Spam and the Effect of Bots on Information Diffusion in Social Media. Computational Social Sciences, 229–255.

- Fink, K. (2019) The Biggest Challenge Facing Journalism: A Lack of Trust. Journalism 20 (1): 40–43. doi:10.1177/1464884918807069

- Jin J. (2022) Convolutional Neural Networks for Biometrics Applications. SHS Web Conf., 144 (2022) 03013.

- Kaur G. and C. K. Verma (2014) Comparative analysis of biometric modalities, International Journal of Advanced Research in Computer Science.

- Kavazi D., Smirnov V., Shilina S., MOZGIII, MingDong Li, Contreras R., Gajera H., Lavrenov D. (2021) Humanode. Whitepaper v. 0.9.6 “You are [not] a bot”. https://arxiv.org/pdf/2111.13189.pdf

- Kolomeets M, Chechulin A (2021) Analysis of the malicious bots market. In: Conference of open innovation association, FRUCT.

- Kolo C., Mütterlein J., Schmid S. A. (2022) Believing Journalists, AI, or Fake News: The Role of Trust in Media. Proceedings of the 55th Hawaii International Conference on System Sciences.

- Park, S., C. Fisher, T. Flew, and U. Dulleck (2020) Global Mistrust in News: The Impact of Social Media on Trust. International Journal on Media Management 22 (2).

- Pennycook, G., & Rand, D. G. (2021). The psychology of fake news. Trends in Cognitive Sciences, 25(5), 388–402.

- Prochazka F, Schweiger W (2019) How to measure generalized trust in news media? An adaptation and test of scales. Communication Methods and Measures 13(1): 26–42.

- Raheem, E. A., Ahmad, S. M. S., & Adnan, W. A. W. (2019). Insight on face liveness detection: A systematic literature review. International Journal of Electrical and Computer Engineering, 9(6), 5865.

- Shao C, Ciampaglia GL, Varol O, Yang K, Flammini A, Menczer F (2017) The spread of low-credibility content by social bots. Nat Commun.

- Thakur S, Breslin JG (2021) Rumour prevention in social networks with layer 2 blockchains. Soc Netw Anal Mining.

- Usher, N. (2018) Re-thinking Trust in the News: A Material Approach Through “Objects of Journalism”. Journalism Studies 19 (4): 564–578.

- Vosoughi, S., Roy, D., & Aral, S. (2018). The spread of true and false news online. Science, 359(6380), 1146–1151.

- Zhang X., Ali A. Ghorbani (2020) An overview of online fake news: Characterization, detection, and discussion. Information Processing & Management. Vol. 57, Iss.2. ISSN 0306–4573.